NotebookLM’s Latest Update Speeds Up Replies By Up To 40%, And It’s All Thanks To One Minor Change

Summary

- NotebookLM now streams answers in real time, cutting waiting time by 30-40%.

- The update doesn’t affect the use of thinking models; instead, it enhances response flow by gradually displaying answers as they’re generated.

- At Google I/O 2025, Google also announced customizable Audio Overviews and teased upcoming Video Overviews.

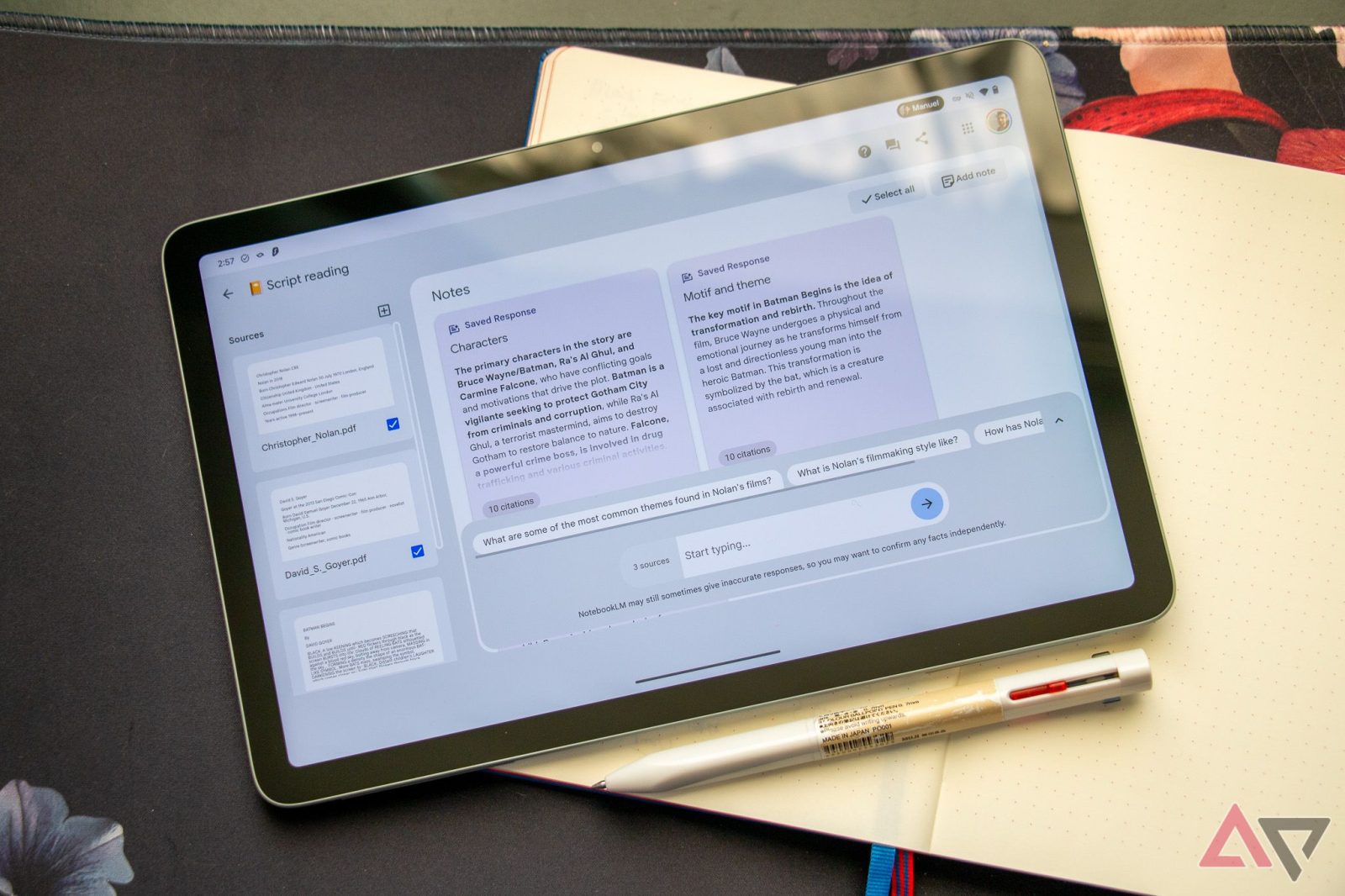

Google’s AI-powered research assistant, NotebookLM, is easily one of the best uses of AI. It’s practical, packs a ton of incredible features in its free plan (with reasonable limits), and rarely hallucinates since the language model is grounded in the sources you add to your notebooks.

Up until a few days ago, the research tool was available only on the web and didn’t have a mobile app. Its iOS and Android apps officially made their debut right before Google I/O 2025. Unfortunately, the apps didn’t entirely live up to my expectations, since they lack most NotebookLM features, like Mind Maps and content generation. But Google just made a minor yet much-needed tweak to NotebookLM that might’ve made up for it.

NotebookLM will now make you wait less for answers

NotebookLM announced via a post on its X (formerly Twitter) account that when you now ask questions in the Chat panel, the reply will appear as it’s generated.

Before this update, the language model would take a few seconds (or more than a minute depending on the query) to answer any questions you had. The issue was, the answers would appear all at once when they were fully generated. NotebookLM’s now shaking things up, and the answers to your queries will appear line by line instead.

Simon Tokumine, NotebookLM’s lead, mentioned in another X post that NotebookLM has begun to take “a little more time” to generate answers since they moved to thinking AI models.

With this change, he claims they’re cutting waiting time down by 30 to 40%. This change doesn’t mean NotebookLM isn’t using thinking models anymore. It’s just that the response is being streamed in real-time instead of showing up all at once.
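To see why streaming shortens the wait without making the model any faster, here is a minimal Python sketch. It is not NotebookLM's implementation: the function names, the four-line answer, and the half-second-per-line pace are all made up for illustration, and the numbers are not meant to reproduce the 30 to 40% figure. It uses a simulated clock to compare when the first text becomes visible in each display mode.

```python
from typing import Iterator, List, Tuple


def generate_lines(lines: List[str], seconds_per_line: float) -> Iterator[Tuple[float, str]]:
    """Yield (simulated_elapsed_seconds, line) as a hypothetical model produces each line."""
    elapsed = 0.0
    for line in lines:
        elapsed += seconds_per_line  # pretend each line takes this long to generate
        yield elapsed, line


def first_visible_buffered(lines: List[str], seconds_per_line: float) -> float:
    """All-at-once display: nothing is shown until the final line is ready."""
    last = 0.0
    for elapsed, _ in generate_lines(lines, seconds_per_line):
        last = elapsed
    return last


def first_visible_streamed(lines: List[str], seconds_per_line: float) -> float:
    """Streamed display: the first line is shown as soon as it is generated."""
    for elapsed, _ in generate_lines(lines, seconds_per_line):
        return elapsed
    return 0.0


# Illustrative values only (assumptions, not measurements).
answer = ["line one", "line two", "line three", "line four"]
buffered_wait = first_visible_buffered(answer, 0.5)
streamed_wait = first_visible_streamed(answer, 0.5)

print(f"Buffered: first text visible after {buffered_wait:.1f}s")
print(f"Streamed: first text visible after {streamed_wait:.1f}s")
```

In both modes the full answer takes the same simulated time to finish. The only thing that changes is how soon you have something to read.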

Google also announced a few other updates for NotebookLM during Google I/O 2025. Firstly, you’ll now have the option to choose between a Shorter or Longer Audio Overview. So, when you need a really quick overview of your sources and have a notebook with tons of material, instead of having to listen to a relatively long podcast, you can nudge the tool to make it shorter for you. Similarly, when you’d like to dive deeply into a topic, you can choose to generate a longer Audio Overview.

NotebookLM also confirmed the rumors swirling around about Video Overviews coming to the tool following the success of Audio Overviews. Frankly, I was skeptical about them, since I’m not the biggest fan of AI-generated videos (and content) in general. However, the preview they uploaded changed my mind completely, and now I can’t wait for the feature to go live.

Showing results in real time rather than all at once is a relatively minor update compared to what Google announced at I/O, but it’s the little improvements like these that truly add up and make a difference in the long run.
