Ryan Haines / Android Authority

My frustrating experiences with the pocket-sized, hype-fueled Rabbit R1 are well-known at this point. From letting it struggle to guide me through a long day to attempting to teach it new tricks, it’s let me down each time I’ve given it a chance. And yet, I’m still intrigued by the idea of an AI-powered model that can learn my intentions and do simple tasks for me. I’d love to give a voice command and have a Large Action Model call me a ride to the train station or order my morning coffee.

So, when Motorola announced a LAM of its own at Lenovo Tech World, I was eager to see it in action. I wanted to know if it felt more upfront and trustworthy than the Leuchtorange-colored R1 gathering dust on my shelf. It only took a few months for me to get my chance, and here’s how Motorola won me over right off the bat.

Cards on the table, please

Here’s the deal — I got to check out Motorola’s LAM in action at the tail end of CES 2025, so you’ll have to put up with a gambling reference or two. And now that we’re on the same page, let me walk you through the demo I got to experience.

Motorola’s LAM experience started as simply as anything else on the Razr Plus (2024): I watched a Motorola employee open her flip phone and tap on an app. This time, she wasn’t tapping on something like Uber or the Starbucks app; she was opening Moto AI. For now, that’s where Motorola’s LAM lives — in its own dedicated app where developers can slowly but steadily fine-tune its training.

Once there, she asked her Razr Plus to order her a “coffee Americano.” She didn’t ask for a specific size or specify where she wanted it from — just a drink to be ready for pickup. And as soon as she finished her command, the LAM sprang into action. It jumped from the Moto AI interface to the Starbucks app and set about placing her order for pickup at the nearest location (The Venetian lobby just a few hundred feet away). She, of course, canceled the order before it could go through, as nobody needs a venti Americano every time they do a LAM demo, not even at CES.

I can watch Motorola’s LAM order me a coffee or call me a ride. That’s better than trusting it to work behind the scenes.

Her point was made: Motorola’s LAM works quickly and keeps its cards on the table. Rather than logging into services like DoorDash and Spotify via a sketchy backdoor virtual machine in the Rabbithole, you simply download apps to your phone and do a little teaching along the way. If you’re constantly opting for Uber XLs or choosing Comfort Electric rides, Motorola’s model will learn that after a few tries and emulate your preferences.

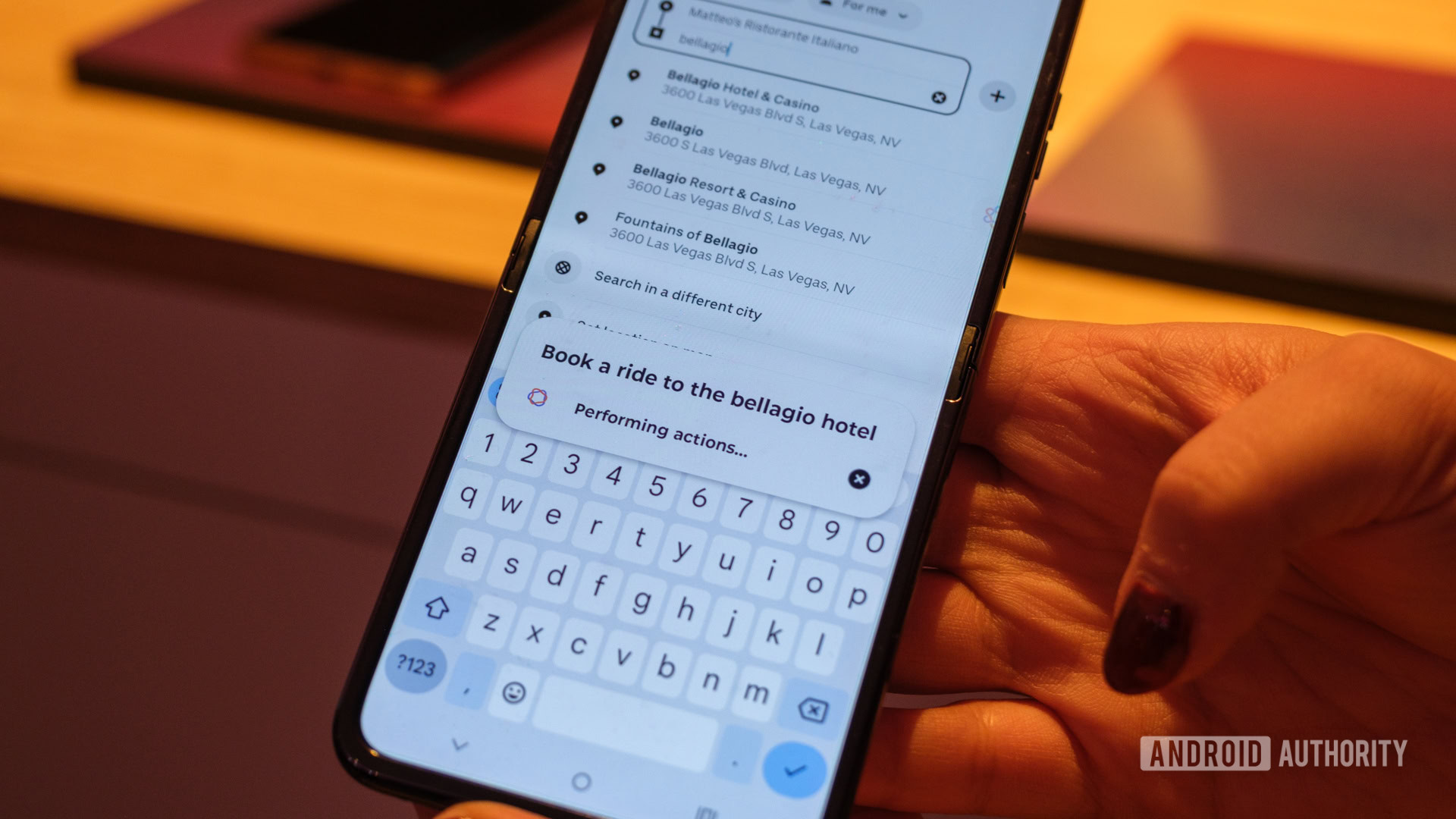

More importantly, Motorola’s model didn’t feel like it was gambling. I watched the Motorola employee frantically try to cancel an Uber ride to the Bellagio simply because it picked out her ride and found her a driver faster than I’ve ever seen a ride secured in Las Vegas. It didn’t brush her off with an excuse about virtual rabbits being too tired or force her to use a little scroll wheel to make her choices; the LAM just paid out its winnings because it knew what she wanted.

Here’s why I’m betting on Motorola

As you can probably tell, Motorola’s LAM proved more impressive to me than the R1 at every turn. Do I love that it still takes over the entire display of the Razr Plus (2024) to “perform actions,” as Motorola puts it? No, not really. I’d love to see it graduate to running in the background so I can keep using my phone in the meantime, but it’s a start. Besides, by watching the model open Uber and hail a ride, I at least know that it’s doing what I want, the way I want it done.

Perhaps what gives me the most confidence in the usefulness of Motorola’s LAM is that, well, it’s kind of an app. Rabbit made a big fuss about how its Rabbit OS is decidedly not an app and can, therefore, be more beneficial than an app, at least until it was proven to be an app. And with Rabbit’s not-an-app app, the limitations were immediately apparent. It could only work with a short list of virtual machine-based integrations (DoorDash, Spotify, and others). Otherwise, it would fall back on a variation of an OpenAI model when asked to search for an answer.

Motorola’s LAM uses the apps on your phone, which feels much safer than a virtual back-end environment.

With Motorola’s model, however, it still feels like the door is wide open. Yes, the examples of Uber and Starbucks I was shown were very simple and specific, but it felt like the LAM was in control from start to finish. I didn’t have to check in and guide it along the way; it simply learned the procedure over time and replicated it — no complicated Teach Mode or accident-prone LAM Playground required. And, because it uses the apps already on your phone, it feels much more likely that Motorola’s model will grow and expand to include ordering from Amazon, mapping out restaurants in Google Maps, and other things that I might be too lazy to do.

Of course, there are still a few things that I’ll have to wait on when it comes to Motorola’s AI-powered LAM. For starters, I have yet to find out how the training process actually works. Although I saw the model run through some basic tasks, the Motorola team was a bit tighter-lipped on how the LAM (which is currently still in beta testing) would go about expanding its skill set. And yet, I’m willing to take that risk if it means that my primary device, rather than a secondary companion, can actually serve as the AI companion that Rabbit thought it was selling me.
