
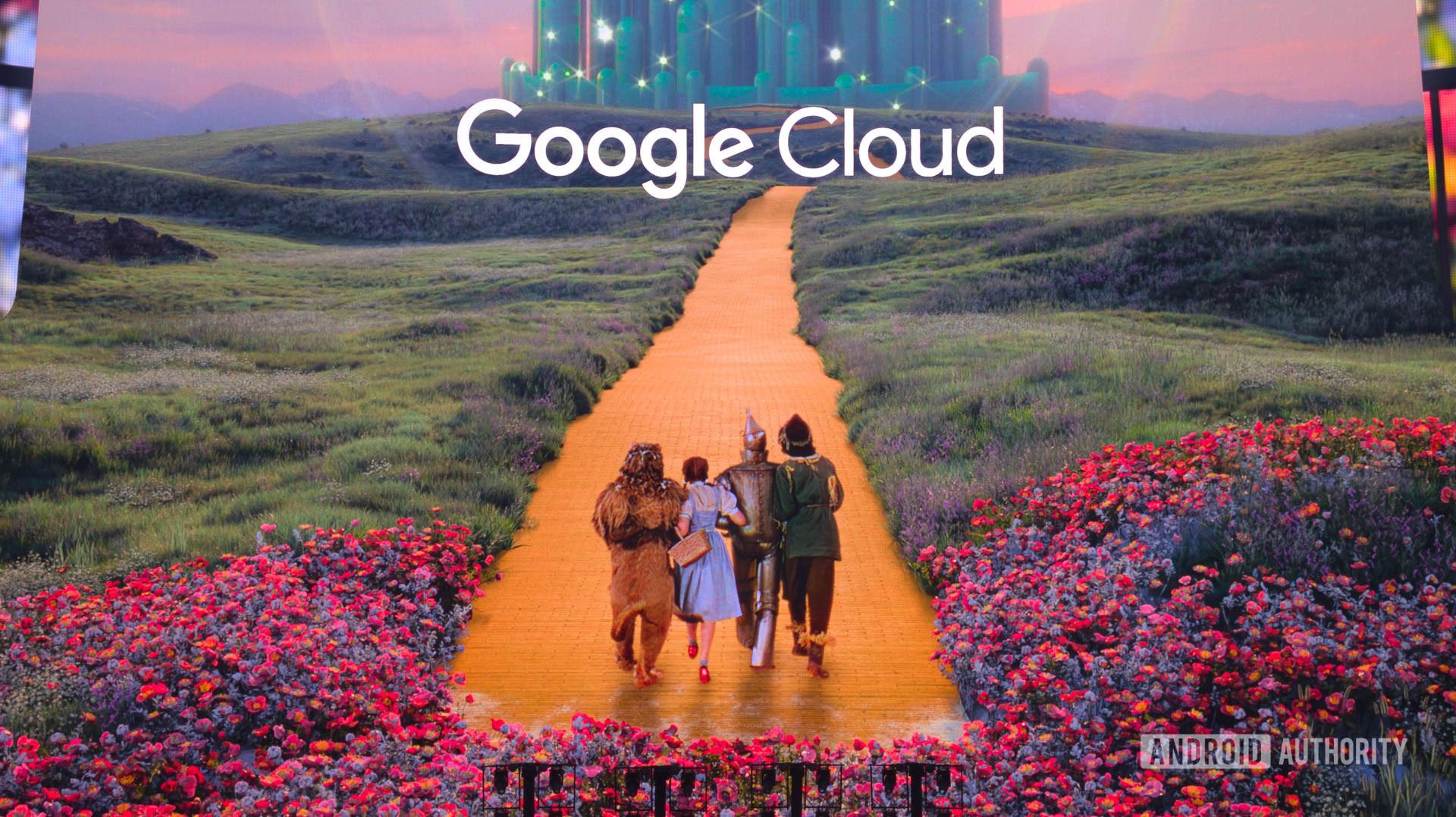

Over the past two years, Google has been working on using generative AI to upscale and augment The Wizard of Oz, the beloved 1939 film that has been a touchstone for generations. Google DeepMind and Google Cloud — along with Warner Bros. Discovery and Magnopus — are doing this work to show the movie in its entirety at Sphere, the iconic spherical theater in Las Vegas.

Today, I was in the audience to see the first look at what Oz will be like there when it starts playing on August 28, 2025. The event helped kick off Google Cloud Next 2025, which Google kindly invited me to.

I’ve got a lot to say about this, so I’m going to jump right in. First, I want to tell you what is going on with Oz. Then, I want to tell you what the two sides of my brain are saying about this: the tech geek side and the film geek side. Trust me, each side has very different opinions about this endeavor.

The Wizard of Oz, Google, and Sphere: What’s happening?

A few days ago, we learned that The Wizard of Oz was coming to Sphere, along with a new film from the creative team behind Free Solo, the Oscar-winning 2018 documentary about the first free solo climb of El Capitan. However, the initial announcement left out a crucial detail: The Wizard of Oz shown at Sphere will not be the film you’ve seen before.

Sphere’s interior houses a theater with a 16K (16,000 pixels on each side) screen that wraps over the audience’s heads. Additionally, there’s theater lighting throughout the venue, giant wind machines at the front, and haptic motors built into the seats, allowing for a fully immersive experience that’s been used to great effect with films, live concerts, and more.

The Wizard of Oz, as it has existed for over 80 years, won’t work at Sphere, so Google is using AI to alter it.

To show Oz as it is in something like Sphere makes little sense. The film is over 80 years old. It did get a 4K HDR restoration in 2020, but even then, a movie with a 4:3 aspect ratio made to be shown on conventional screens would look silly blown up to the proportions needed for Sphere’s unique layout. Just imagine a blurry close-up of Judy Garland’s face at a height of six stories and only partially visible because her forehead is behind you, and her chin is in front of you. Scary stuff.

This is where Google comes in. Using the power of DeepMind on Google Cloud’s massive array of servers, an enormous team of engineers and researchers has been using AI to both upscale and augment Oz. The intention is to make the classic film look crystal clear at 16K and to use generative AI to augment shots so they work better on the concave screen.

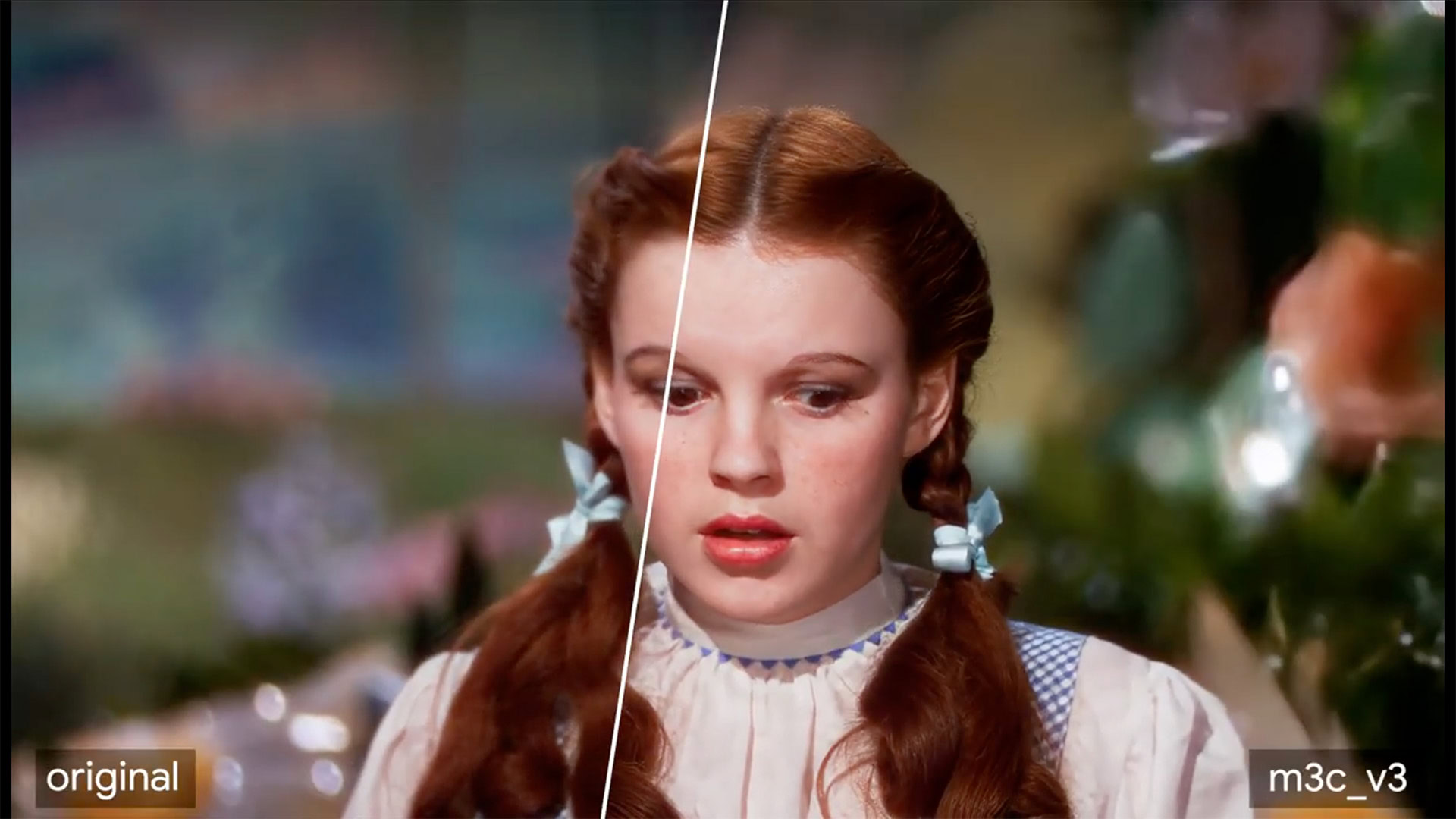

A picture is worth a thousand words, so check this out to see what Google needs to overcome:

C. Scott Brown / Android Authority

During today’s presentation, Google showed a few classic scenes from Oz that appeared to be how they will look when it plays in full at the end of August. We saw the full rendition of Dorothy singing “Over the Rainbow,” the scene where the Wicked Witch of the West turns over the hourglass of red sand as she taunts Dorothy, and the terrifying introduction of Dorothy and her friends to the Wizard of Oz’s fiery chamber. Viewed solely from a technical perspective, it was absolutely jaw-dropping. This is where I’m going to let my inner tech geek run free.

Tech geek perspective: A gargantuan AI-powered marvel

The first part of Google’s work on this film involved upscaling it to 16K. This required technology that had never existed before. You can see the heightened level of detail Google was going for in the composite shot below:

While upscaling The Wizard of Oz was an ordeal in itself, making the film work at Sphere requires going even further: completely re-making it. The movie is filled with close-ups of people’s faces talking, singing, screaming, sniveling, etc. Every single one of those shots needs to be “zoomed out” so that the character is in the center of the frame, but there is more going on around them to fill in the parts of Sphere’s screen that you can’t see unless you tilt your head back.

Doing this requires “outpainting.” This is when you take a photo (or video, in this case) and add more information to it to make it larger without changing the proportions of actors, props, etc.

Check out these two GIFs to see what I mean. The one on the left is the tornado scene in Oz as it was originally shot, and the one on the right is an outpainted scene with more information added in by AI:

This is a relatively simple example. Most of Judy Garland’s body is in the shot, and there are no other actors involved, so all the AI needs to do is add more land, more debris, and Garland’s legs, which isn’t too tricky considering how much information it already has from the original shot.
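To make the outpainting idea a bit more concrete, here is a minimal Python sketch of just the first step: placing the original frame on a larger canvas and marking which pixels would have to be invented. This is my own illustration, not Google's pipeline. The function name `prepare_outpaint_canvas` and the toy 4x3 "frame" are hypothetical, and the genuinely hard part (a generative model filling in the masked area convincingly) is left out entirely.

```python
def prepare_outpaint_canvas(frame, out_w, out_h, fill=0):
    """Center `frame` (a list of pixel rows) on a larger canvas.

    Returns (canvas, mask). mask[y][x] is True where new content would
    have to be generated and False where original pixels are kept.
    The original pixels are copied unchanged, so proportions are preserved.
    """
    in_h = len(frame)
    in_w = len(frame[0])
    if out_w < in_w or out_h < in_h:
        raise ValueError("canvas must be at least as large as the frame")

    # Offsets that center the original frame inside the new canvas.
    left = (out_w - in_w) // 2
    top = (out_h - in_h) // 2

    canvas = [[fill] * out_w for _ in range(out_h)]
    mask = [[True] * out_w for _ in range(out_h)]

    for y in range(in_h):
        for x in range(in_w):
            canvas[top + y][left + x] = frame[y][x]
            mask[top + y][left + x] = False  # original pixel, nothing to generate

    return canvas, mask


# Toy stand-in for a 4:3 film frame (4 pixels wide, 3 pixels tall).
frame = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12]]

canvas, mask = prepare_outpaint_canvas(frame, 8, 8)
generated = sum(row.count(True) for row in mask)
print(f"{generated} of {8 * 8} pixels must be generated")  # 52 of 64
```

Even in this toy case, most of the enlarged canvas is empty space that something has to fill, which gives a rough sense of why shots with less usable information are so much harder.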

From what I’ve seen so far, Google is doing an incredible job overcoming the technical challenges of this project. It’s astounding, really.

Where things get really difficult is when AI needs to add in a more significant portion of a person’s body or sometimes even entire actors that weren’t originally there. Although Google only showed us glimpses of these kinds of shots, you can use your imagination for one of the most famous scenes in the movie: the ending, where Dorothy is explaining her adventures to her family and friends (“And you were there, and you were there!”). A shot just on Dorothy’s face during this scene won’t work at Sphere, so Google needs to outpaint it. But, if it uses outpainting for that shot, it will involve bringing in the other characters surrounding Dorothy in bed. In this situation, the AI will need to understand which characters are there, where they are positioned, what they look like, what they would be doing, how they would move, how they would speak, and on and on. It is incredibly difficult to do — or at least difficult to do well enough so that it won’t look preposterous.

As I said, we only got glimpses of this at the presentation I attended, but what I saw looked very impressive. Strictly from a technical viewpoint, Google is doing amazing things here. The fact that it’s done this in about two years is a testament to just how fast generative AI is moving.

From a film appreciation standpoint, though, things are a little murkier.

Film geek perspective: Did we really need an AI remake of Oz?

If you’re worried that I will be a film purist and tell you that AI has no place touching movies, especially ones as sacrosanct as The Wizard of Oz, don’t worry because I won’t do that. I look at what Google is doing here as not much different from those immersive artwork shows you see at museums nowadays. You know, the ones where you can “step inside” a virtual recreation of “Starry Night” by Van Gogh. It’s not the same thing as viewing the actual painting but rather a new way to experience that art. This new version of Oz is similar.

Google was so preoccupied with whether or not it could that it didn’t stop to think if it should.

However, I will say that what I saw at Sphere today didn’t look like The Wizard of Oz. Take the “Over the Rainbow” sequence. Judy Garland didn’t look like a real person. She looked like she was waxy, which is a problem we see a lot when AI tries to replicate humans or upscale old images. Her hair looked like a lump of solid plastic at certain points, too. To try to show you this, I shot the photo you see above from my seat and cropped it into the image below:

C. Scott Brown / Android Authority

Even if you can ignore the weird, uncanny valley stuff AI does to shots like the above, other shots are so different they’re barely the same thing anymore. The scene where Dorothy and the gang first meet the Wizard of Oz is also wholly different. Check out how they compare below:

This touches on the whole problem with this endeavor, which is that what you’ll see at Sphere in August is not The Wizard of Oz but an algorithmic interpretation of the film. Obviously, Google didn’t just feed the movie to Gemini and then say, “Make this 16K and look good at Sphere.” It has spent years refining it to make it the best it can possibly be. But no matter how long it has spent (and continues to spend) tweaking it, the final product is still the same: It’s an AI remake of one of the most beloved and influential films of all time. Do we really need that?

I am also incredibly nervous about the parts of the film where AI generates entire characters. In the presentation, the Google and Magnopus teams talked about outpainting scenes with Dorothy, the Scarecrow, the Cowardly Lion, and the Tin Man. In those situations, AI must decide what off-screen characters are doing in shots they were never in. In a sense, Google is now directing new footage for the film and jamming it into the original. I haven’t seen it yet, so I will reserve my judgment, but I can’t deny that I’m very, very nervous about how that might turn out. There are people worldwide who hold those characters very near and dear, and if this reimagined version of the film messes with that in some way, it will upset them.

Ultimately, though, I sincerely hope this goes well. The immersive Van Gogh events have made Van Gogh’s art more popular than ever and introduced his work to a new generation. Maybe this AI-augmented Wizard of Oz will have a similar effect on young people who might not be excited about watching an 86-year-old movie at home but will be excited about watching it at Sphere with shaking seats, wind machines, and the like. The Wizard of Oz, after all, has inspired some of the greatest filmmakers of our time, so maybe this will be a chance for it to continue to do that for generations to come.
