Edgar Cervantes / Android Authority

TL;DR

- Google is expanding access to Gemini Nano, its on-device AI model, through new ML Kit GenAI APIs.

- These new APIs, likely to be announced at I/O 2025, will enable developers to easily implement features like text summarization, proofreading, rewriting, and image description generation in their apps.

- Unlike the experimental AI Edge SDK, ML Kit’s GenAI APIs will be in beta, support image input, and be available on a wider range of Android devices beyond the Pixel 9 series.

Generative AI technology is changing how we communicate and create content online. Many people ask AI chatbots like Google Gemini to perform tasks such as summarizing an article, proofreading an email, or rewriting a message. However, some people are wary of using these AI chatbots, especially when these tasks involve highly personal or sensitive information. To address these privacy concerns, Google offers Gemini Nano, a smaller, more optimized version of its AI model that runs directly on the device instead of on a cloud server. While access to Gemini Nano has so far been limited to a single device line and text-only input, Google will soon significantly expand its availability and introduce image input support.

Late last month, Google published the session list for I/O 2025, which includes a session titled “Gemini Nano on Android: Building with on-device gen AI.” The session’s description states it will “introduce a new set of generative AI APIs that harness the power of Gemini Nano. These new APIs make it easy to implement use cases to summarize, proofread, and rewrite text, as well as to generate image descriptions.”

In October, Google opened up experimental access to Gemini Nano via the AI Edge SDK, allowing third-party developers to experiment with text-to-text prompts on the Pixel 9 series. The AI Edge SDK enables text-based features like rephrasing, smart replies, proofreading, and summarization, but it notably does not include support for generating image descriptions, a feature Google highlighted for the upcoming I/O session. Thus, it’s likely that the “new set of generative AI APIs” mentioned in the session’s description refers to either something entirely different from the AI Edge SDK or a newer version of it. Fortunately, we don’t have to wait until next week to find out.

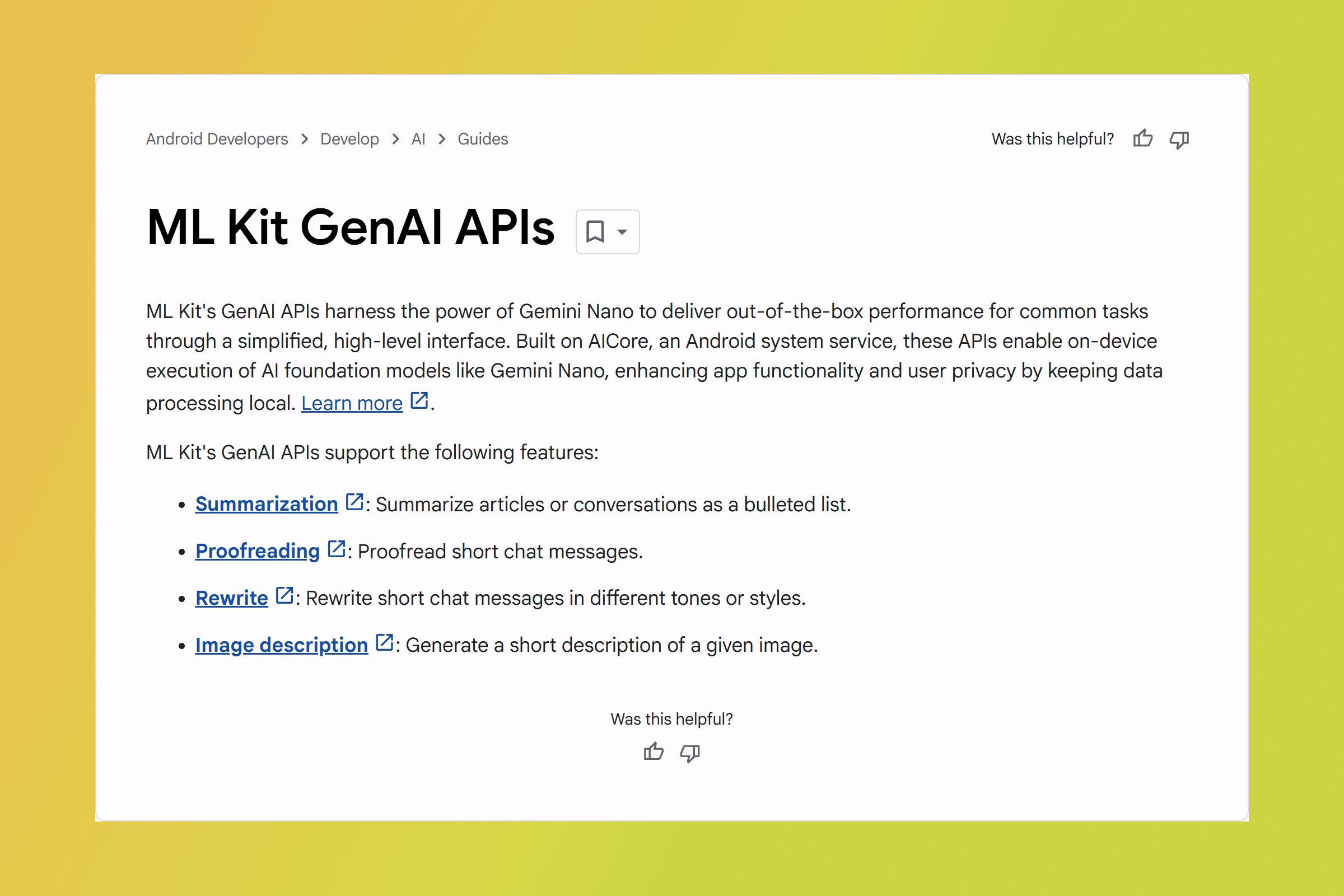

Earlier this week, Google quietly published documentation on ML Kit’s new GenAI APIs. ML Kit is an SDK that allows developers to leverage machine learning capabilities in their apps without needing to understand how the underlying models work. The new GenAI APIs allow developers to “harness the power of Gemini Nano to deliver out-of-the-box performance for common tasks through a simplified, high-level interface.” Like the AI Edge SDK, they are “built on AICore,” enabling “on-device execution of AI foundation models like Gemini Nano, enhancing app functionality and user privacy by keeping data processing local.”

Mishaal Rahman / Android Authority

In other words, ML Kit’s GenAI APIs make it simple for developers to add Gemini Nano-powered features to their apps while keeping data private and performance high. These features currently include summarizing, proofreading, and rewriting text, as well as generating image descriptions. All four match the use cases named in the I/O session’s description, suggesting that Google intends to formally announce ML Kit’s GenAI APIs next week.

Here’s a summary of all the features offered by ML Kit’s GenAI APIs:

- Summarization: Summarize articles or chat conversations as a bulleted list.
  - Generates up to three bullet points
  - Languages: English, Japanese, and Korean
- Proofreading: Polish short content by refining grammar and fixing spelling errors.
  - Languages: English, Japanese, German, French, Italian, Spanish, and Korean
- Rewrite: Rewrite short chat messages in different tones or styles.
  - Styles: Elaborate, Emojify, Shorten, Friendly, Professional, Rephrase
  - Languages: English, Japanese, German, French, Italian, Spanish, and Korean
- Image description: Generate a short description of a given image.
  - Languages: English
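To make the feature matrix above concrete, here is a minimal Python sketch that encodes it as a lookup table and checks whether a given request falls within the reported limits. `FeatureSpec`, `FEATURES`, and `check_request` are hypothetical names chosen for illustration; they are not part of ML Kit’s actual API, which targets Android apps. The data is transcribed from the list above and may change as the beta evolves.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical capability table transcribed from the feature list above.
# FeatureSpec, FEATURES, and check_request are illustrative names, not ML Kit APIs.


@dataclass(frozen=True)
class FeatureSpec:
    input_kind: str                       # "text" or "image"
    languages: frozenset[str]             # supported languages, as reported
    styles: frozenset[str] = frozenset()  # only Rewrite defines styles
    max_bullets: int | None = None        # only Summarization caps its output


# Proofreading and Rewrite share the same seven languages.
SEVEN_LANGUAGES = frozenset(
    {"English", "Japanese", "German", "French", "Italian", "Spanish", "Korean"}
)

FEATURES = {
    "summarization": FeatureSpec(
        "text", frozenset({"English", "Japanese", "Korean"}), max_bullets=3
    ),
    "proofreading": FeatureSpec("text", SEVEN_LANGUAGES),
    "rewrite": FeatureSpec(
        "text",
        SEVEN_LANGUAGES,
        styles=frozenset(
            {"Elaborate", "Emojify", "Shorten", "Friendly", "Professional", "Rephrase"}
        ),
    ),
    "image_description": FeatureSpec("image", frozenset({"English"})),
}


def check_request(feature: str, language: str, style: str | None = None) -> list[str]:
    """Return the reasons a request is unsupported; an empty list means it is supported."""
    spec = FEATURES.get(feature)
    if spec is None:
        return [f"unknown feature: {feature}"]
    problems = []
    if language not in spec.languages:
        problems.append(f"{feature} does not support {language}")
    if style is not None and style not in spec.styles:
        problems.append(f"{feature} does not support style {style}")
    return problems


print(check_request("rewrite", "German", "Emojify"))  # []
print(check_request("summarization", "German"))       # ['summarization does not support German']
```

Keeping the limits in a single table means an app built this way could flag unsupported combinations, such as a German summary, before attempting any on-device inference.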

Compared to the existing AI Edge SDK, ML Kit’s GenAI APIs will be offered in “beta” instead of “experimental access.” This ‘beta’ designation could mean Google will allow apps to use the new GenAI APIs in production. Currently, developers cannot release apps using the AI Edge SDK, meaning no third-party apps can leverage Gemini Nano at this time. Another difference is that the AI Edge SDK is limited to text input, whereas ML Kit’s GenAI APIs support images. This image support enables the image description feature, allowing apps to generate short descriptions of any given image.

The biggest difference between the current version of the AI Edge SDK and ML Kit’s GenAI APIs, though, is device support. While the AI Edge SDK only supports the Google Pixel 9 series, ML Kit’s GenAI APIs can be used on any Android phone that supports the multimodal Gemini Nano model. This includes devices like the HONOR Magic 7, Motorola Razr 60 Ultra, OnePlus 13, Samsung Galaxy S25, Xiaomi 15, and more.
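The differences described above can be summarized as a small, hypothetical eligibility check. In this Python sketch, `Device`, `SdkProfile`, and `can_run` are illustrative names, not real Google APIs, and the device flags are supplied by the caller rather than detected; whether a particular phone ships the multimodal Gemini Nano model is an assumption the caller must make. Production availability for the ML Kit beta is left as `None` because Google has not confirmed it.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical model of the reported differences between the two SDKs.
# None of these names are real Google APIs.


@dataclass(frozen=True)
class Device:
    name: str
    is_pixel9_series: bool     # caller-supplied; not detected
    has_multimodal_nano: bool  # caller-supplied; not detected


@dataclass(frozen=True)
class SdkProfile:
    name: str
    designation: str                  # "experimental" or "beta", as reported
    input_kinds: frozenset[str]       # accepted input types
    requires_pixel9: bool             # AI Edge SDK: Pixel 9 series only
    requires_multimodal_nano: bool    # ML Kit GenAI: any multimodal Gemini Nano device
    production_release: bool | None   # None = not confirmed


AI_EDGE_SDK = SdkProfile(
    "AI Edge SDK", "experimental", frozenset({"text"}),
    requires_pixel9=True, requires_multimodal_nano=False, production_release=False,
)
ML_KIT_GENAI = SdkProfile(
    "ML Kit GenAI APIs", "beta", frozenset({"text", "image"}),
    requires_pixel9=False, requires_multimodal_nano=True, production_release=None,
)


def can_run(sdk: SdkProfile, device: Device, input_kind: str) -> bool:
    """Return True if the reported constraints allow `sdk` to handle `input_kind` on `device`."""
    if input_kind not in sdk.input_kinds:
        return False
    if sdk.requires_pixel9 and not device.is_pixel9_series:
        return False
    if sdk.requires_multimodal_nano and not device.has_multimodal_nano:
        return False
    return True


# The Galaxy S25 is named above as supporting the multimodal Gemini Nano model.
galaxy_s25 = Device("Samsung Galaxy S25", is_pixel9_series=False, has_multimodal_nano=True)
print(can_run(ML_KIT_GENAI, galaxy_s25, "image"))  # True
print(can_run(AI_EDGE_SDK, galaxy_s25, "text"))    # False
```

Modeling the constraints as data rather than scattered conditionals makes the table easy to update if Google broadens device support or confirms production use for the beta.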

Developers who are interested in trying out Gemini Nano in their apps can get started by reading the public documentation for the ML Kit GenAI APIs.
