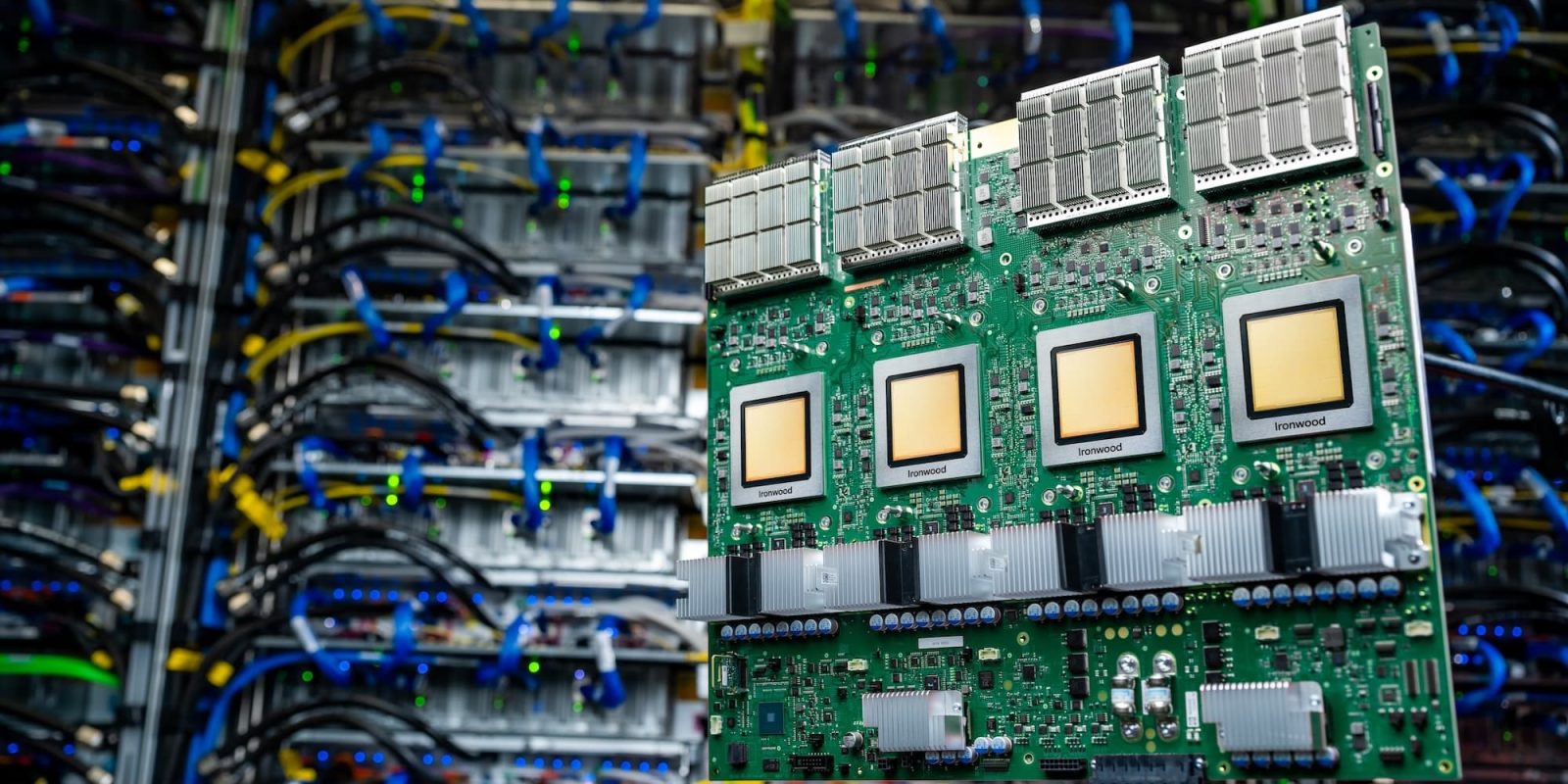

In addition to the latest in Workspace at Cloud Next 2025, Google today announced Ironwood, its 7th-generation Tensor Processing Unit (TPU) and the latest generative models.

Ironwood

The Ironwood TPU is Google’s “most performant and scalable custom AI accelerator to date,” as well as energy efficient, and the “first designed specifically for inference.” Specifically:

Ironwood represents a significant shift in the development of AI and the infrastructure that powers its progress. It’s a move from responsive AI models that provide real-time information for people to interpret, to models that provide the proactive generation of insights and interpretation. This is what we call the “age of inference” where AI agents will proactively retrieve and generate data to collaboratively deliver insights and answers, not just data.

Ironwood is designed to manage the demands of thinking models, which “encompass Large Language Models (LLMs), Mixture of Experts (MoEs) and advanced reasoning tasks,” that require “massive” parallel processing and efficient memory access. The latter is achieved by minimizing “data movement and latency on chip while carrying out massive tensor manipulations.”

At the frontier, the computation demands of thinking models extend well beyond the capacity of any single chip. We designed Ironwood TPUs with a low-latency, high bandwidth ICI network to support coordinated, synchronous communication at full TPU pod scale.

Google Cloud customers can access a 256 or 9,216-chip — each individual chip offers peak compute of 4,614 TFLOPs — configuration. The latter is a pod that has a total of 42.5 Exaflops or: “more than 24x the computer power of the world’s largest supercomputer – El Capitan – which offers just 1.7 Exaflops per pod.”

Ironwood offers performance per watt that is 2x relative to the 6th-gen Trillium announced in 2024, as well as 192 GB of High Bandwidth Memory per chip (6x Trillium).

Pathways is Google’s distributed runtime that powers internal large-scale training and inference infrastructure. It’s now available for Google Cloud customers.

Gemini 2.5 Flash

Gemini 2.5 Flash is Google’s “workhorse model” where low latency and cost are prioritized. Coming soon to Vertex AI, it features “dynamic and controllable reasoning.”

The model automatically adjusts processing time (‘thinking budget’) based on query complexity, enabling faster answers for simple requests. You also gain granular control over this budget, allowing explicit tuning of the speed, accuracy, and cost balance for your specific needs. This flexibility is key to optimizing Flash performance in high-volume, cost-sensitive applications

Example high-volume use cases include customer service and real-time information processing.

Gen AI models

Google is now making its Lyria text-to-music model available for enterprise customers “in preview with allowlist” on Vertex AI. This model can generate high-fidelity audio across a range of genres. Companies can use it to quickly create soundtracks that are tailored to a “brand’s unique identity.” Another use is for video production and podcasting:

Lyria eliminates these hurdles, allowing you to generate custom music tracks in minutes, directly aligning with your content’s mood, pacing, and narrative. This can help accelerate production workflows and reduce licensing costs.

The following is an example prompt: “Craft a high-octane bebop tune. Prioritize dizzying saxophone and trumpet solos, trading complex phrases at lightning speed. The piano should provide percussive, chordal accompaniment, with walking bass and rapid-fire drums driving the frenetic energy. The tone should be exhilarating, and intense. Capture the feeling of a late-night, smoky jazz club, showcasing virtuosity and improvisation. The listener should not be able to sit still.”

Meanwhile, Veo 2 is getting editing capabilities that let you alter existing footage:

- Inpainting: Get clean, professional edits without manual retouching. You can remove unwanted background images, logos, or distractions from your videos, making them disappear smoothly and perfectly in every single frame, so it looks like they were never there.

- Outpainting: Extend the frame of existing video footage, transforming traditional video into optimized formats for web and mobile platforms. This helps make it easy to adapt your content for various screen sizes and aspect ratios – for example, converting landscape video to portrait for social media shorts.

Similarly, Imagen 3 Editing features improvements to inpainting “for reconstructing missing or damaged portions of an image,” as well as objects removal.

Chirp 3 is Google’s audio understanding and generation model. It offers “HD voices” with natural and realistic speech in 35+ languages with eight speaker options. The understanding aspect powers a new feature that “accurately separates and identifies individual speakers in multi-speaker recordings” for better transcription.

Another new feature lets Chirp 3 “generate realistic custom voices from 10 seconds of audio input.”

This enables enterprises to personalize call centers, develop accessible content, and establish unique brand voices—all while maintaining a consistent brand identity. To ensure responsible use, Instant Custom Voice includes built-in safety features, and our allowlisting process involves rigorous diligence to verify proper voice usage permissions.

On the safety front, “DeepMind’s SynthID embeds invisible watermarks into every image, video and audio frame that Imagen, Veo, and Lyria produce.”

Add 9to5Google to your Google News feed.

FTC: We use income earning auto affiliate links. More.

Leave a Reply