Robert Triggs / Android Authority

It’s safe to say that AI is permeating all aspects of computing, from deep integration into smartphones to Copilot in your favorite apps and, of course, the obvious giant in the room, ChatGPT. However, working with AI, especially as a journalist, raises a lot of concerns. For one, it has been trained on the work, creative or otherwise, of others. More importantly, cloud services can retain the prompts and inputs you send their way and may use them for further training. So, I’ve been dabbling with hosting my own LLM for the last few months.

Running your own LLM is free and gives you full control.

AI has shifted from being a futuristic concept to an everyday tool. Its role in creative industries, productivity apps, and customer interactions is undeniable. But amid this surge, it’s easy to forget the importance of ownership. Who owns your queries? Who controls your insights? Running a local LLM allows you to answer those questions decisively — you do.

There’s something powerful about knowing your AI lives on your own machine — no subscriptions, no cloud dependency, and no oversight. Running LLMs locally has given me control, privacy, and a rebellious sense of independence. From Meta’s Llama model to the latest DeepSeek model, I’ve found that this setup makes all the difference in turning AI from a tool to an everyday productivity partner. Here’s why I’ve been running a local LLM, and how you can too.

Privacy isn’t negotiable

Calvin Wankhede / Android Authority

Every time I use a cloud-based AI, I’m handing over my thoughts, questions, and sometimes sensitive data to a third party. Whether I’m drafting internal business emails, brainstorming ideas for clients, or even journaling personal reflections, the idea of those words being stored on a distant server — or worse, analyzed for training — makes me uneasy.

With DeepSeek running on my own machine, my data stays where it belongs: under my control. This is essential to me, both for personal and professional reasons. While I’m not a fan of any organization reading my private journal entries, this is even more important when I’m dealing with privileged information.

Constantly learning LLMs cannot be trusted with private, privileged information. A self-hosted LLM gets around that.

As a journalist and business owner, I’m often privy to embargoed information, and I cannot risk entering that data into an AI model that will use it for training. Even seemingly innocuous prompts can contain valuable intellectual property that you wouldn’t want shared or analyzed. This is especially relevant for those working in finance, law, or creative industries.

A local LLM solves that issue because prompts and responses are processed on your own machine and never have to leave it. Additionally, a local LLM isn’t held to the same censorship standards as the web version. For example, the web version of DeepSeek actively censors information about Chinese politics, and other LLMs are known to show political bias. An open-source self-hosted AI model isn’t necessarily beholden to those restrictions.

Offline access

My other reason for using an offline LLM is a no-brainer. ChatGPT simply won’t work without solid internet connectivity, which rules it out on a long flight. Meanwhile, my offline Llama and DeepSeek models run perfectly fine even on my MacBook Air.

I’ve drafted outlines for blog posts, parsed data-heavy spreadsheets, and cleaned up code while flying at 30,000 feet. This simply wouldn’t be possible with a cloud-based AI tool. Having a local LLM on hand regardless of connectivity transforms the experience and makes it a reliable AI buddy.

Subscription fatigue

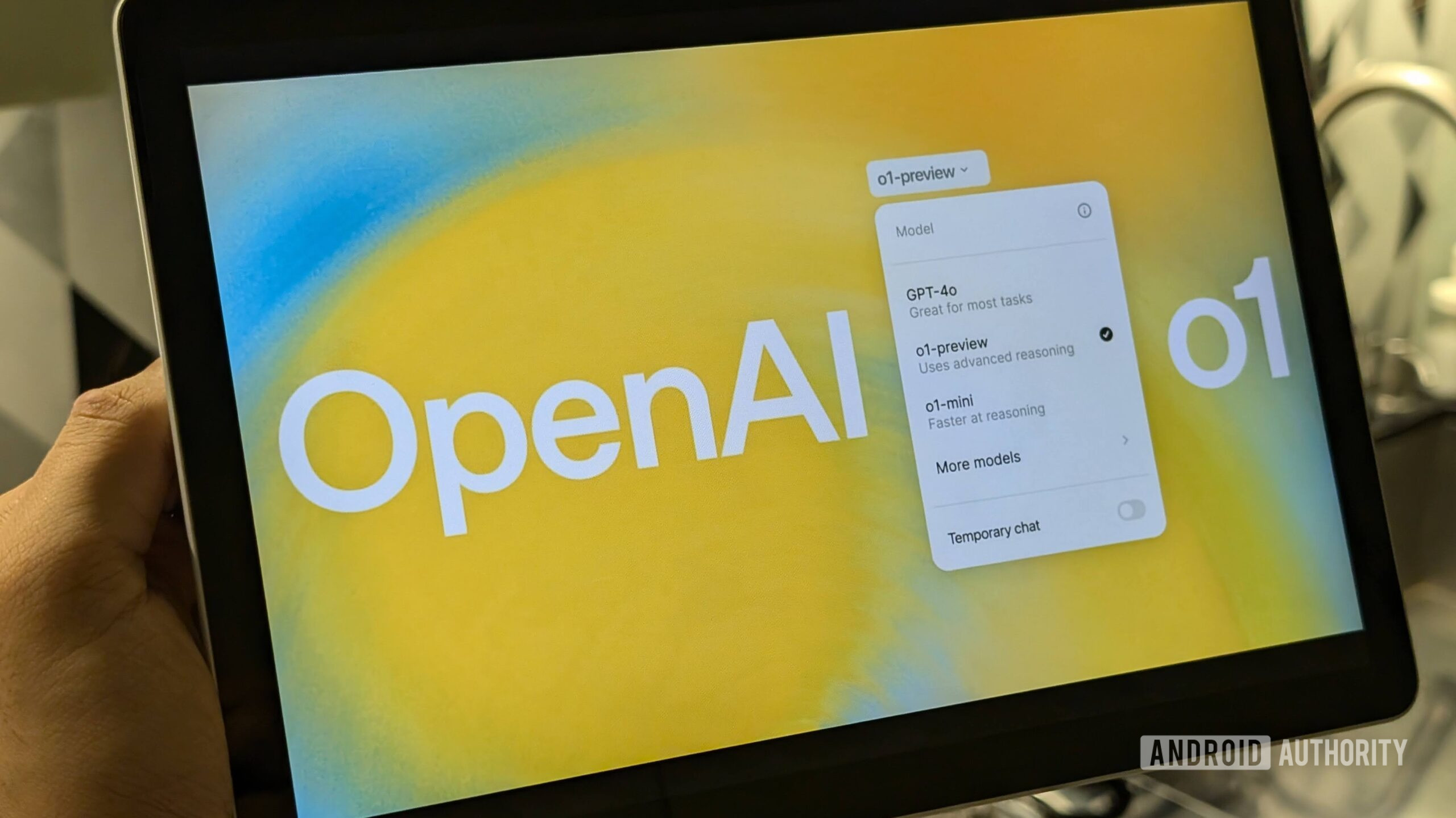

Between Netflix, various cloud streaming and storage services, productivity apps, and now AI services — the recurring cost of subscriptions quickly adds up. And unless you’re getting serious productivity out of it, the $20 per month that ChatGPT costs is overkill.

An open-source LLM is capable enough for most everyday tasks without a monthly subscription fee attached to it.

For my purposes, which include outlining journal entries, grammar checks, and minor code corrections, running my own LLM makes perfect sense. Even the 8-billion-parameter DeepSeek model I’m running right now is perfectly capable of those tasks. Would I benefit from a full-fledged model? Sure. But neither the subscription cost nor the hassle of setting up a cluster to run it is worth the minor benefits I would personally gain. And that’s before we get to the earlier point of offline access. Of course, your use case may vary.

Learning and development

I trained as an engineer and have an innate curiosity to learn how tech works. Predictably, I find LLMs incredibly fascinating. Loading up my own LLM locally gives me a unique opportunity to learn how it works — especially with DeepSeek, which exposes its internal dialogue when making rational, and sometimes irrational, decisions.
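If you want to study that internal dialogue separately from the final answer, a small helper can split the two. This is a minimal sketch that assumes the model wraps its reasoning in `<think>...</think>` tags, as DeepSeek R1 output typically does; the function name `split_reasoning` is made up for illustration.

```python
import re

# Assumption: reasoning is delimited by <think>...</think> tags in the raw output.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> tuple[str, str]:
    """Return (reasoning, answer) extracted from a raw model response."""
    # Collect the text of every reasoning block, trimmed of extra whitespace.
    reasoning = "\n".join(part.strip() for part in THINK_BLOCK.findall(raw))
    # Whatever remains after removing the reasoning blocks is the answer.
    answer = THINK_BLOCK.sub("", raw).strip()
    return reasoning, answer


if __name__ == "__main__":
    sample = "<think>\nThe user wants a sum. 2 + 2 is 4.\n</think>\n\nThe answer is 4."
    thoughts, reply = split_reasoning(sample)
    print("Reasoning:", thoughts)
    print("Answer:", reply)
```

If a response contains no tags at all, the reasoning comes back empty and the whole text is treated as the answer.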

For instance, I recently trained a smaller subset model to help analyze logs from my smart home equipment. Watching the AI learn and refine its responses was both fascinating and rewarding. Beyond professional applications, understanding the mechanics of LLMs has made me more thoughtful about the ethical implications of AI.

So… how do I run my own LLM?

Dhruv Bhutani / Android Authority

Let me preface this by saying that you’re going to need a pretty beefy setup to run an LLM locally, and even then, you’re looking at a quantized model, meaning one whose weights are stored at reduced precision to save memory. A full-size, unquantized model can require hundreds of gigabytes of RAM that most users are unlikely to have. With that said, Apple’s M-series silicon is a solid bet for running local AI models.

In fact, I tested DeepSeek on an M3 MacBook Air with 16GB of RAM, and it didn’t have much trouble running the 8-billion-parameter model. On the PC side of things, a GPU is more or less essential. The beefier, the better. You’ll also want as much RAM as you can throw at the LLM, and I’d recommend 16GB as the bare minimum.
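To get a feel for why quantization makes an 8-billion-parameter model fit in 16GB, here is a back-of-the-envelope estimate in Python. It only counts the memory for the weights, and the 20% overhead factor for context and runtime buffers is an assumption for illustration, not a measured figure.

```python
def estimate_model_memory_gb(
    params_billions: float,
    bits_per_weight: float,
    overhead: float = 1.2,  # assumed 20% headroom for context and runtime buffers
) -> float:
    """Rough memory needed to load a model's weights, in gigabytes."""
    bytes_per_weight = bits_per_weight / 8
    # One billion parameters at one byte each is roughly one gigabyte.
    weights_gb = params_billions * bytes_per_weight
    return weights_gb * overhead


if __name__ == "__main__":
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        needed = estimate_model_memory_gb(8, bits)
        print(f"8B model at {label}: ~{needed:.1f} GB")
```

Under these assumptions, the 16-bit version of an 8B model comes out at about 19 GB, which would not fit in 16GB of RAM, while a 4-bit quantized version needs under 5 GB.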

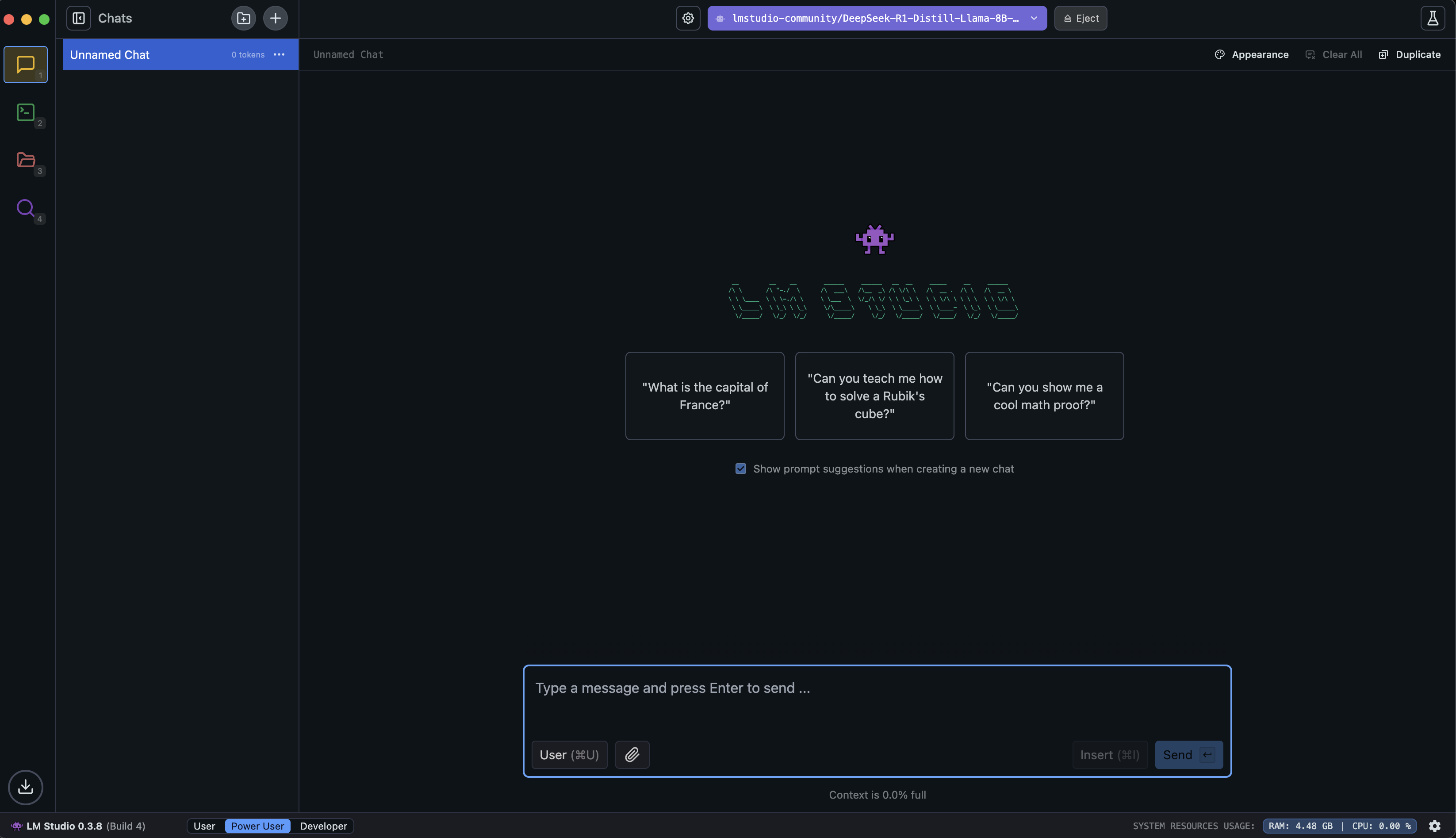

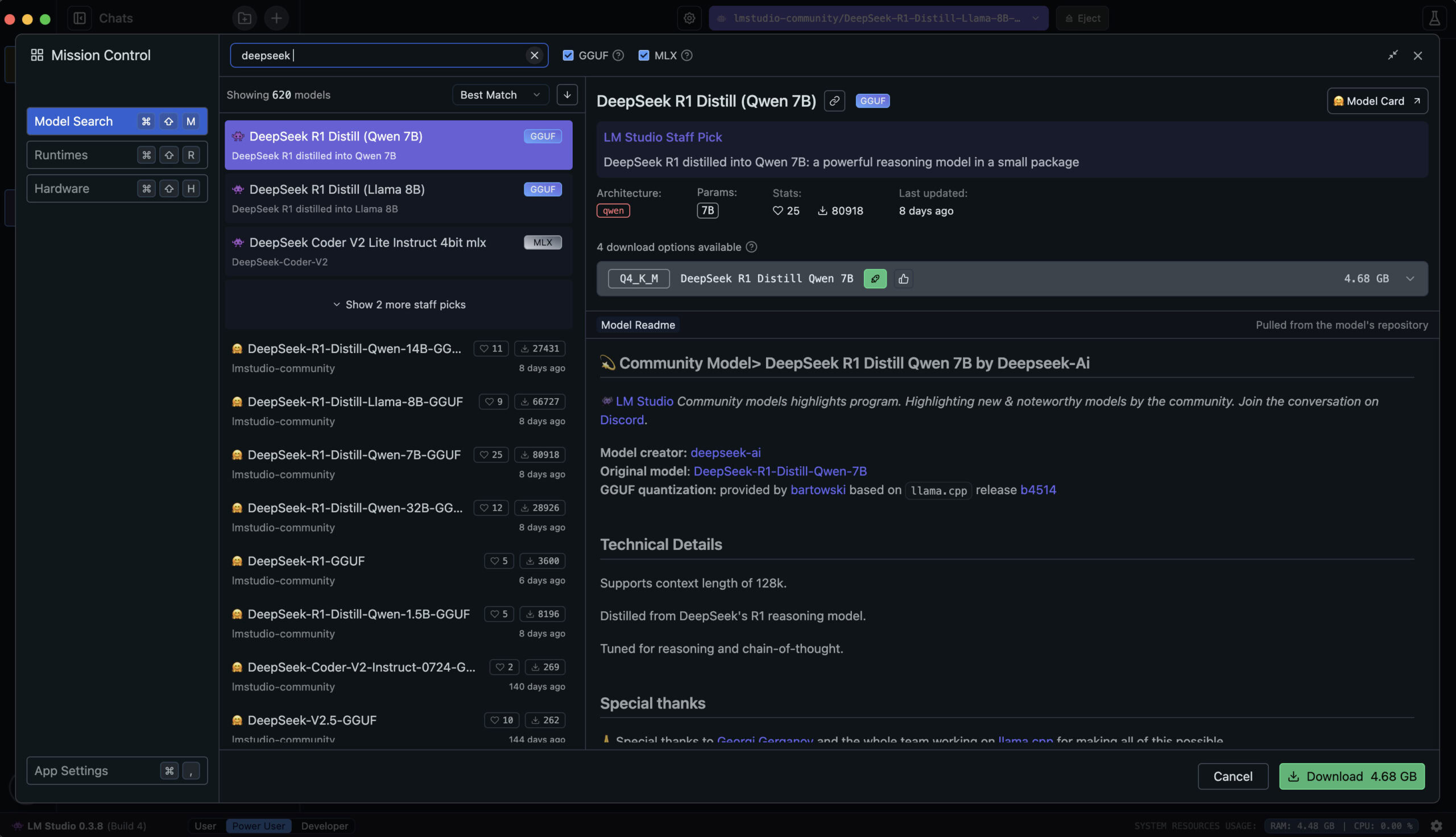

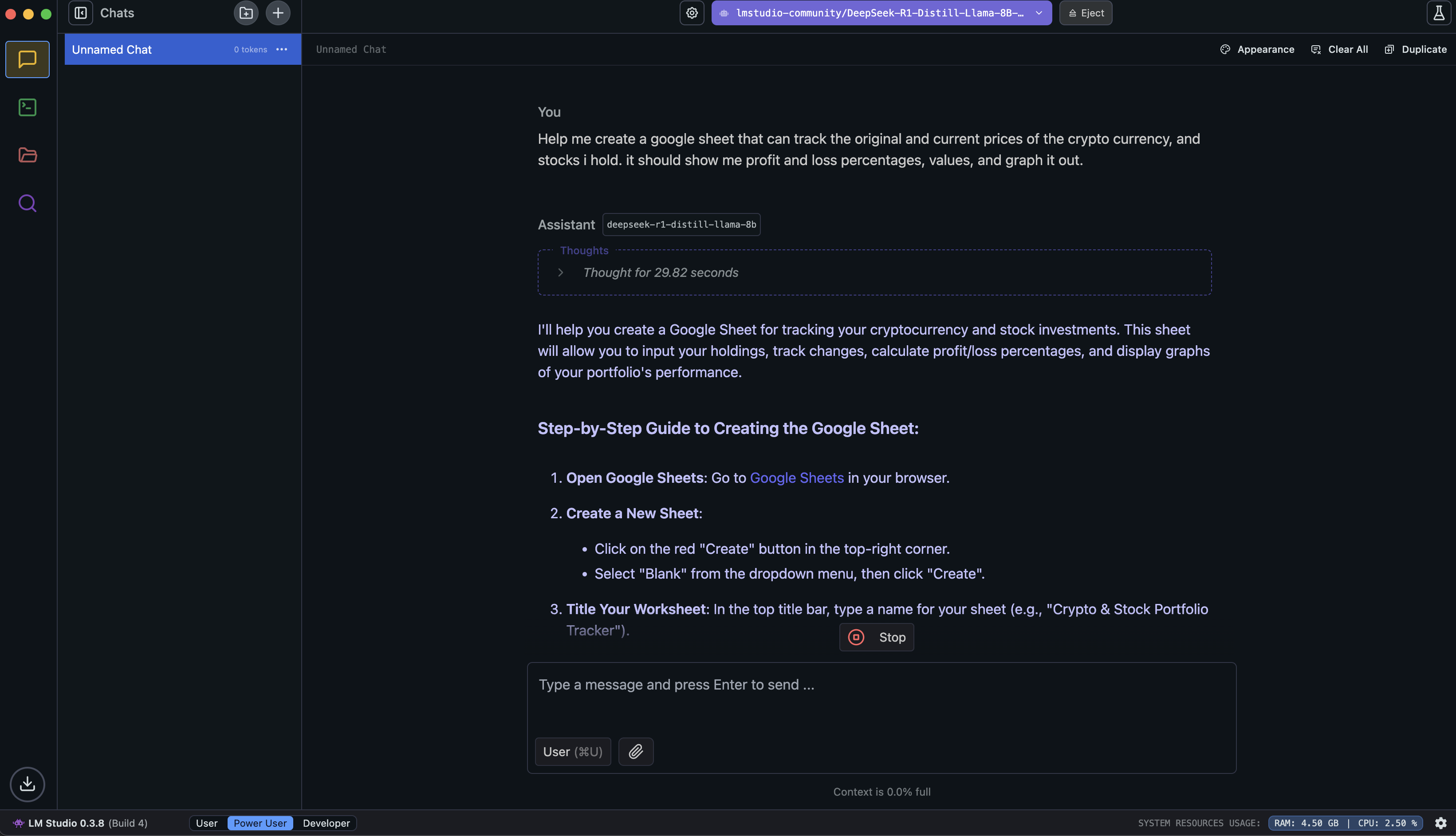

The internet’s favorite way to run a local LLM tends to be Ollama, but I think there’s a better option. Ollama is a great solution for those who are used to the command line or want to pass on commands programmatically, but LM Studio is my preferred way of running LLMs on my computer. One of the best things about LM Studio is that it abstracts most of the complexity. You won’t need to fiddle with dependencies or complex configurations: just download, install, and you’re good to go.
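For a sense of what passing commands to Ollama programmatically looks like, here is a minimal Python sketch that talks to its local HTTP API. It assumes Ollama is already running on its default port (11434) and that the model you name has been pulled; the helper names `build_generate_request` and `ask_ollama` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's generate endpoint."""
    body = {
        "model": model,
        "prompt": prompt,
        "stream": False,  # ask for a single JSON reply instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local model and return its text response."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.loads(response.read().decode("utf-8"))
    return reply["response"]
```

With the server running, a call such as `ask_ollama("deepseek-r1:8b", "Summarize this paragraph: ...")` should return the model's reply as a string. The model tag here is an example and has to match whatever you have pulled locally.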

LM Studio offers an easy-to-use, GUI-based solution for running your own AI models.

The utility wraps up the entire process in a neat package and presents a chat interface that’s instantly familiar to anyone who has used ChatGPT. No command-line access is needed.

Just head to the LM Studio website and download the file for your operating system. By default, LM Studio will offer to download the Llama model, but you can skip that.

Instead, tap the magnifying glass icon to enter the Discover tab. LM Studio taps into the Hugging Face repository and makes it trivial to download any available large language model. Better still, it tells you if your computer can handle the model or not. Keep an eye out for quantized models, as those tend to be easier to run on less powerful computers without sacrificing too much performance.

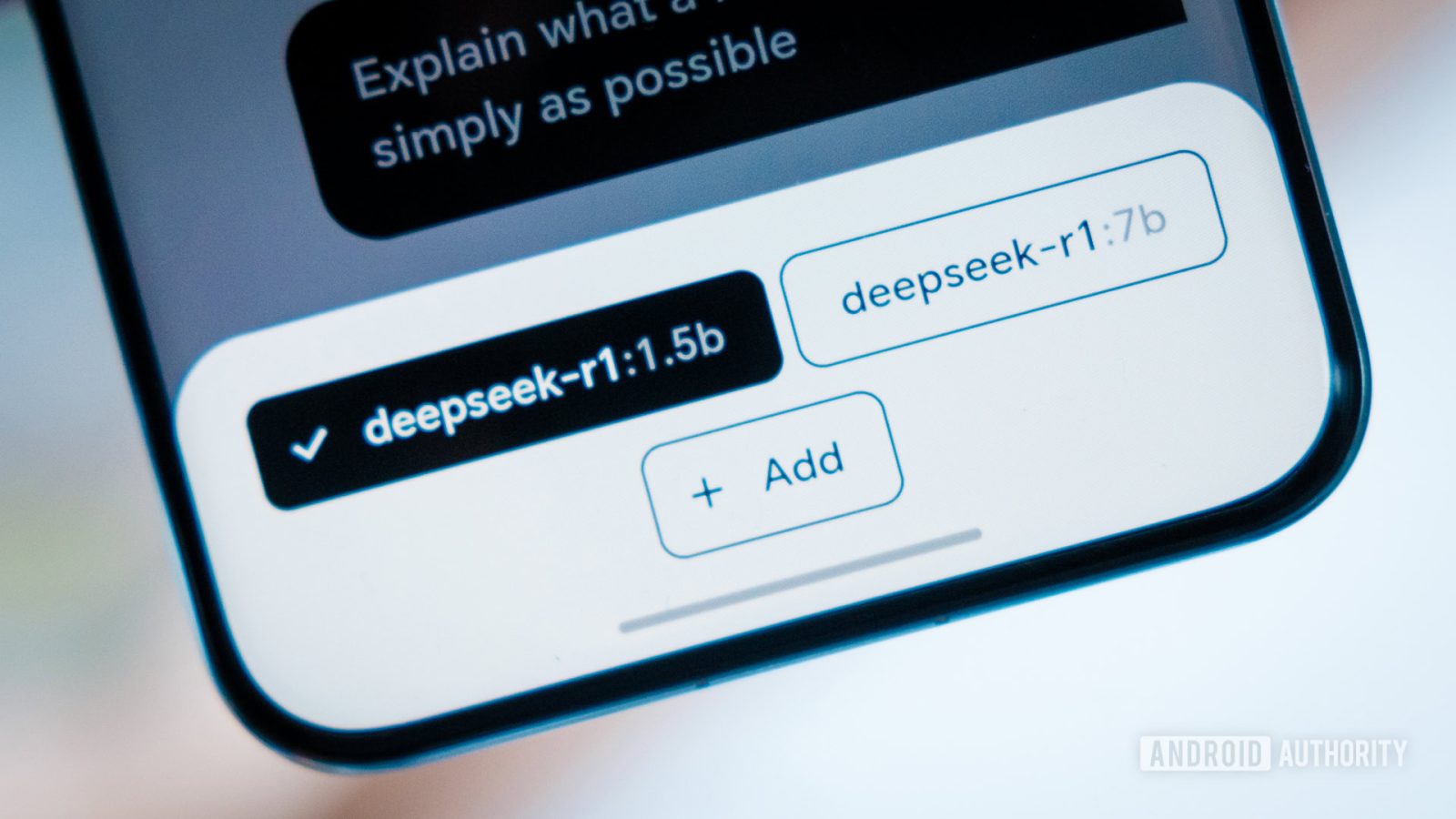

Just search for DeepSeek R1, and you’ll be presented with an option to download it. That’s it. Tap the chat button and get talking to the LLM as you would on the website. You can even swap out AI models on the fly to draw comparisons between the outputs.
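If you would rather script those comparisons than click through the chat window, LM Studio can also expose a local, OpenAI-compatible server. The sketch below assumes that server has been switched on inside LM Studio and is listening at its default address, and that the models you name are already downloaded. The function names are hypothetical, and the `send` parameter exists only so the comparison logic can be tried without a running server.

```python
import json
import urllib.request
from typing import Callable

# Assumption: LM Studio's local server is enabled at its default address.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_payload(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat completion request body."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }


def send_to_lm_studio(payload: dict) -> dict:
    """POST the payload to the local server and return the parsed JSON reply."""
    request = urllib.request.Request(
        LM_STUDIO_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read().decode("utf-8"))


def compare_models(
    models: list[str],
    prompt: str,
    send: Callable[[dict], dict] = send_to_lm_studio,
) -> dict[str, str]:
    """Ask every model the same prompt and collect each answer by model name."""
    answers = {}
    for model in models:
        reply = send(build_chat_payload(model, prompt))
        answers[model] = reply["choices"][0]["message"]["content"]
    return answers
```

Calling `compare_models([...], "Explain quantization in one sentence.")` with the identifiers LM Studio shows for your downloaded models returns a dictionary of answers keyed by model, which makes side-by-side comparison easy.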

Reclaiming privacy and productivity

Running large language models locally wasn’t just a technical decision for me — it was about reclaiming control. There’s something liberating about knowing your data stays with you, your tools don’t need permission from the internet, and you can work without worrying about subscriptions or censorship. That’s why this choice feels right for me.

Running your own LLM is easy, private, and reduces your reliance on big tech.

And for anyone trying to reduce their reliance on big tech, running your own open-source LLM ensures that you’re participating in a shift towards decentralized AI. It’s one small but crucial step towards digital sovereignty. Or perhaps you just want a weekend project to play around with. No matter your reasoning, getting your own LLM up and running is easy, and worth a shot for almost anyone.
